K-Nearest Neighbor (KNN) Algorithm

By Yashodhan Omase

Introduction

- K-Nearest Neighbor (KNN) is one of the simplest machine learning algorithms and is based on the supervised learning technique.

- The KNN algorithm can be used for regression as well as for classification.

- KNN makes no assumption about the underlying data distribution, hence it is called a non-parametric algorithm.

- KNN does not learn a model from the training dataset. Instead of training, it stores the dataset, and at the time of classification it performs its computation on the stored data. For this reason KNN is called a lazy learner algorithm.
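The "lazy learner" idea can be sketched in a few lines of Python. This is only an illustrative sketch, not a reference implementation: the class name `LazyKNN`, its method names, and the choice of Euclidean distance are assumptions made for this example. Note that `fit` does nothing except store the data, while the distance computation happens at prediction time, both for classification (majority vote) and for regression (mean of the neighbors' values).

```python
import math
from collections import Counter


class LazyKNN:
    """Hypothetical minimal KNN: fit() only stores data, work happens at query time."""

    def __init__(self, k=3):
        self.k = k

    def fit(self, points, targets):
        # "Training" is just memorizing the dataset; no parameters are estimated.
        self.points = list(points)
        self.targets = list(targets)
        return self

    def _nearest_targets(self, query):
        # Rank stored points by Euclidean distance (an assumption) and keep the k closest.
        ranked = sorted(
            zip(self.points, self.targets),
            key=lambda pair: math.dist(pair[0], query),
        )
        return [target for _, target in ranked[: self.k]]

    def predict_class(self, query):
        # Classification: majority vote among the k nearest neighbors.
        return Counter(self._nearest_targets(query)).most_common(1)[0][0]

    def predict_value(self, query):
        # Regression: average of the k nearest neighbors' target values.
        nearest = self._nearest_targets(query)
        return sum(nearest) / len(nearest)


# Made-up data, for illustration only.
clf = LazyKNN(k=3).fit(
    [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6)], ["a", "a", "a", "b", "b"]
)
print(clf.predict_class((0.5, 0.5)))

reg = LazyKNN(k=2).fit([(1,), (2,), (3,), (10,)], [1.0, 2.0, 3.0, 10.0])
print(reg.predict_value((2.4,)))
```

Because nothing is computed in `fit`, every prediction has to scan the whole stored dataset, which is the price a lazy learner pays for skipping the training step.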

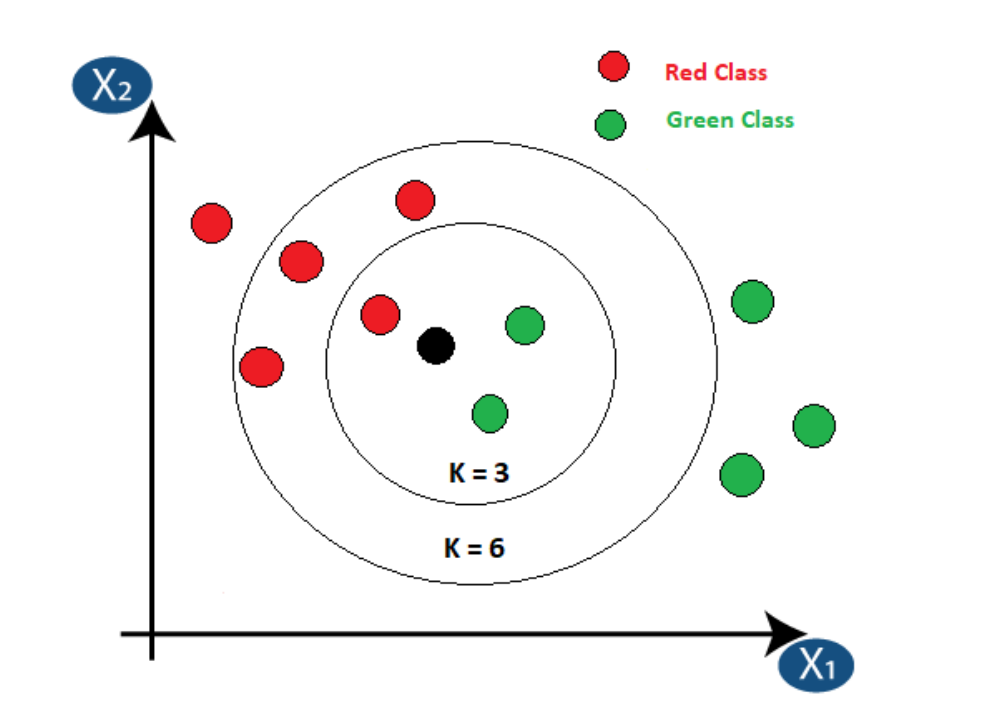

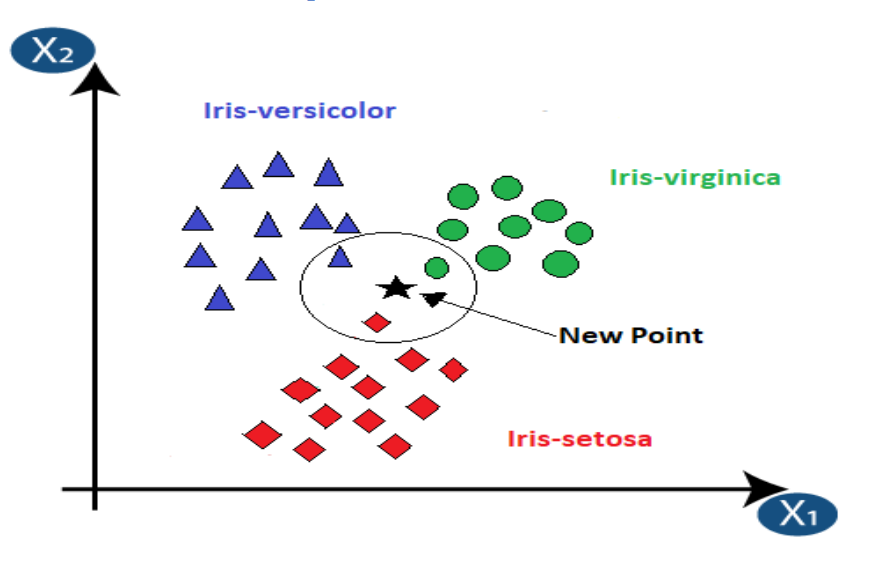

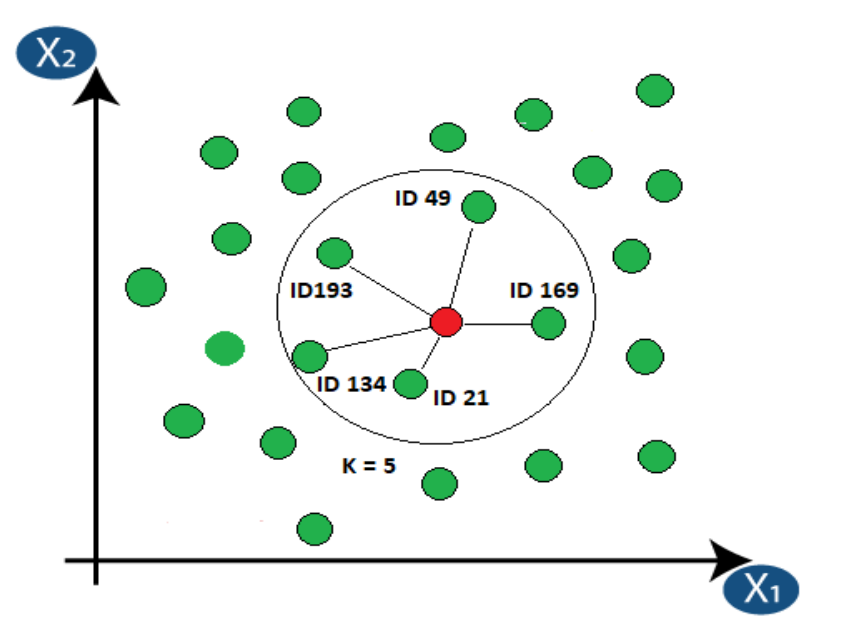

- Suppose we have two classes, a red class and a green class, each with 5 points, and a black point, which is the new point we want to classify.

- First, we find the 3 nearest neighbors of the black point (K = 3). Of these 3 neighbors, two belong to the green class, which is the majority, hence the new point is classified into the green class.
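The example above can be reproduced as a small Python sketch. The coordinates below are hypothetical (the exact values in the figures are not given), chosen so that the 3 nearest neighbors of the black point are two green points and one red point. Euclidean distance and the helper name `k_nearest` are also assumptions made for this illustration.

```python
import heapq
import math
from collections import Counter

# Hypothetical coordinates: 5 red points, 5 green points, 1 new (black) point.
red_points = [(1, 1), (1, 2), (2, 1), (2, 2), (3, 3)]
green_points = [(4, 4), (5, 5), (5, 6), (6, 5), (6, 6)]
black_point = (3.8, 3.8)


def k_nearest(query, labeled_points, k):
    # Return the k (point, label) pairs closest to the query by Euclidean distance.
    return heapq.nsmallest(
        k, labeled_points, key=lambda item: math.dist(item[0], query)
    )


labeled = [(p, "red") for p in red_points] + [(p, "green") for p in green_points]

neighbors = k_nearest(black_point, labeled, k=3)
votes = Counter(label for _, label in neighbors)
predicted = votes.most_common(1)[0][0]

print(neighbors)  # the 3 nearest labeled points
print(votes)      # how many neighbors each class contributed
print(predicted)  # green
```

With these made-up points the nearest neighbors are (4, 4) and (5, 5) from the green class and (3, 3) from the red class, so the vote is 2 to 1 in favor of green.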

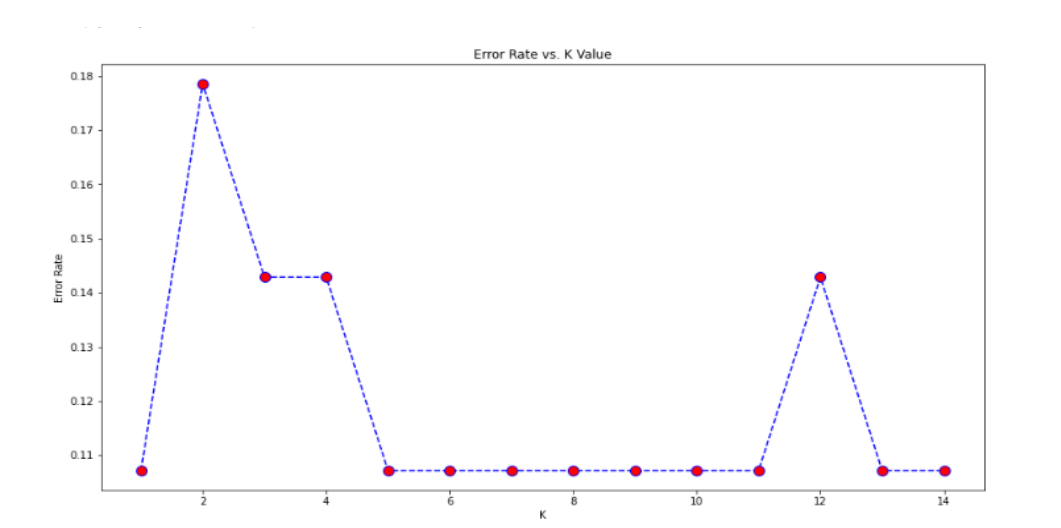

How to select the value of K?

KNN For Classification

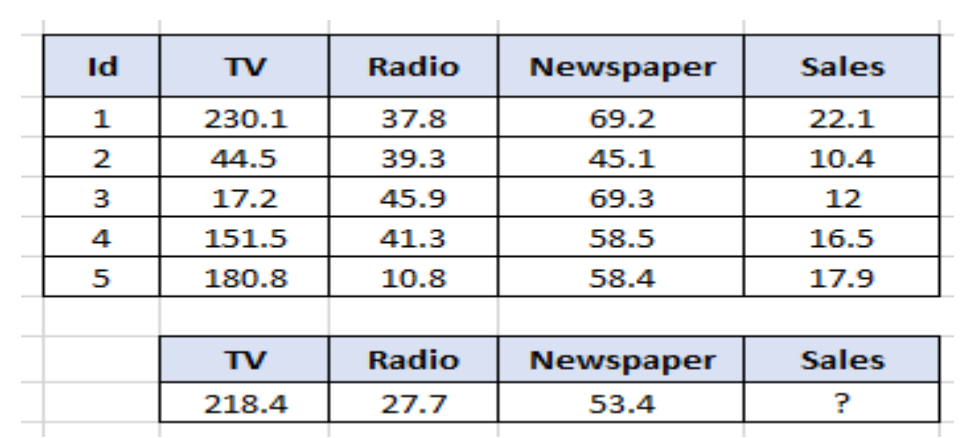

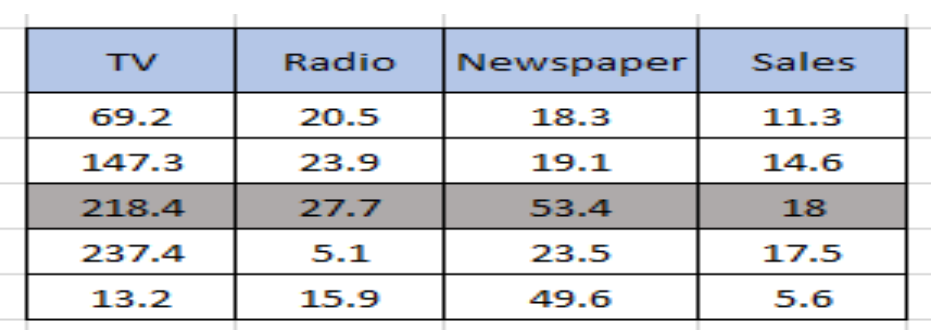

KNN For Regression

Industrial Application of KNN Algorithm

Diabetes Prediction

Lung Cancer Prediction

Recommendation System

Advantages of KNN Algorithm

Disadvantages of KNN Algorithm

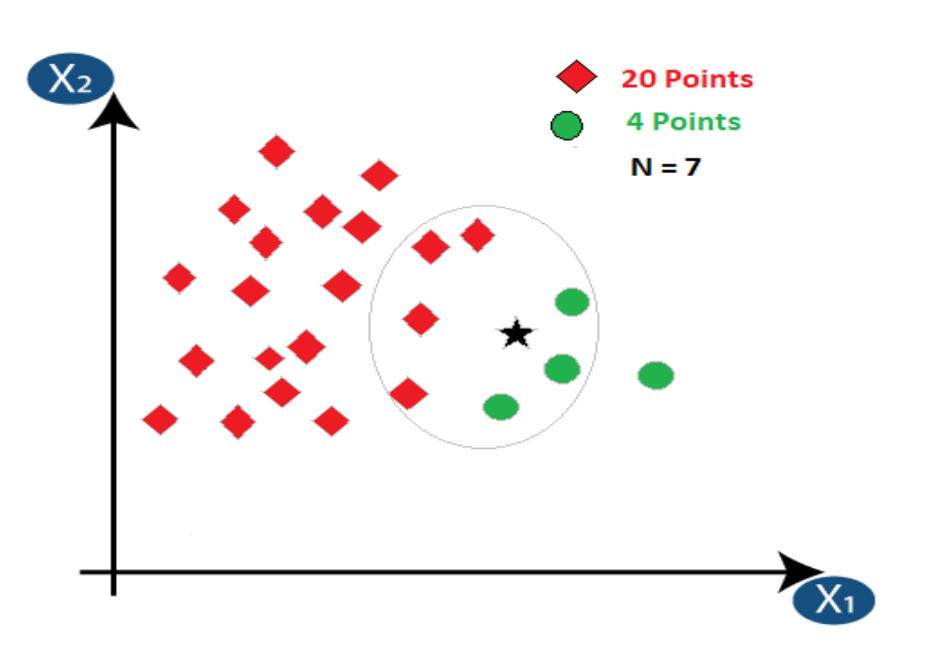

Effect of Imbalanced Data

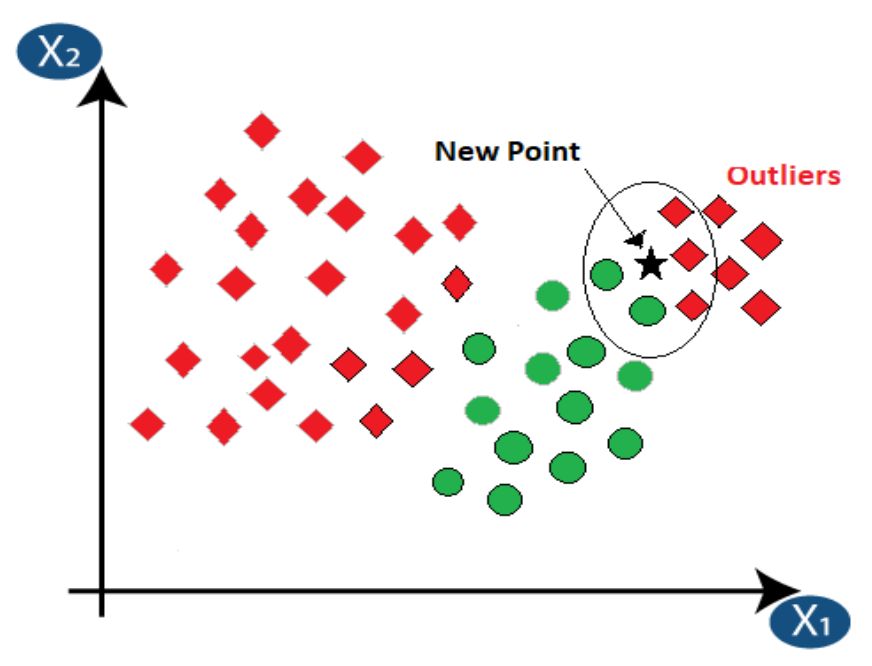

Effect of Outliers